1. Introduction

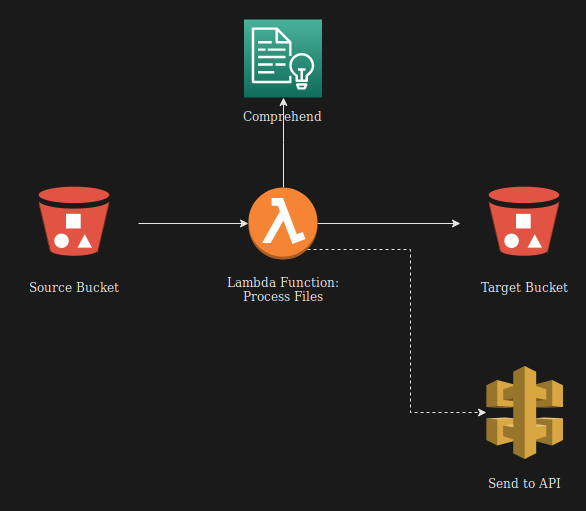

We're going to make a simple serverless app.

The app will process text files, enriching them with sentiment analysis and key-phrase detection.

The app is going to be made up of two S3 buckets: a source bucket where we’ll upload text files and a target bucket which stores the processed data.

We’ll process the data with a Lambda function that reads the file from the source bucket, calls the Comprehend service and then writes the results to a target S3 bucket.

As a bonus, you can send your processed data to an extra API to aggregate results!

Important! First check that you can log in to the AWS console. Once you’ve logged in, change the Region (in the top right) to London (eu-west-2).

2. S3

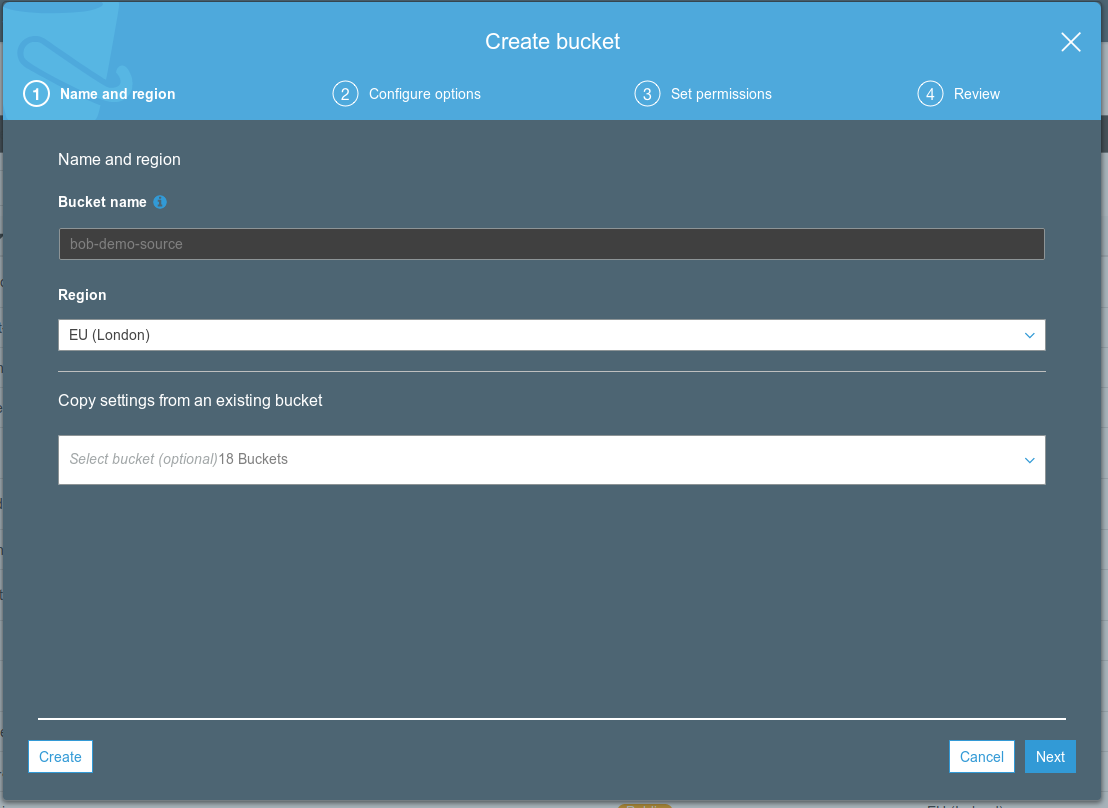

Create two S3 buckets.

In the AWS console, go to S3

Create 2 buckets:

- one for uploading text files

- one for storing the processed data

S3 bucket names must be globally unique and follow DNS naming conventions, so call your source bucket something like `<firstname>-demo-source`.

You can accept all of the other defaults for now.
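To check a name before typing it into the console, a small validator helps. This is a minimal sketch that covers only a subset of the S3 naming rules (3 to 63 characters; lowercase letters, digits, dots and hyphens; begins and ends with a letter or digit; no adjacent dots; not formatted like an IP address). The `-demo-target` suffix for the second bucket is a hypothetical choice, not something the workshop prescribes.

```python
import re

# Simplified subset of the S3 bucket naming rules (not the full list):
# 3-63 characters of lowercase letters, digits, dots and hyphens,
# beginning and ending with a letter or digit.
_BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")
# Four dot-separated groups of 1-3 digits, i.e. shaped like an IPv4 address
_IP_RE = re.compile(r"^\d{1,3}(\.\d{1,3}){3}$")


def is_valid_bucket_name(name: str) -> bool:
    """Return True if `name` passes this simplified S3 naming check."""
    if not _BUCKET_RE.match(name):
        return False
    if ".." in name:  # two adjacent dots are not allowed
        return False
    if _IP_RE.match(name):  # must not be formatted like an IP address
        return False
    return True


def demo_bucket_names(firstname: str) -> tuple[str, str]:
    """Return (source, target) names; the "-demo-target" suffix is hypothetical."""
    base = firstname.strip().lower()
    return f"{base}-demo-source", f"{base}-demo-target"


source, target = demo_bucket_names("Bob")
print(source, is_valid_bucket_name(source))  # bob-demo-source True
```

Passing the check doesn’t guarantee the name is free: global uniqueness can only be confirmed by S3 itself when you create the bucket.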

3. Lambda

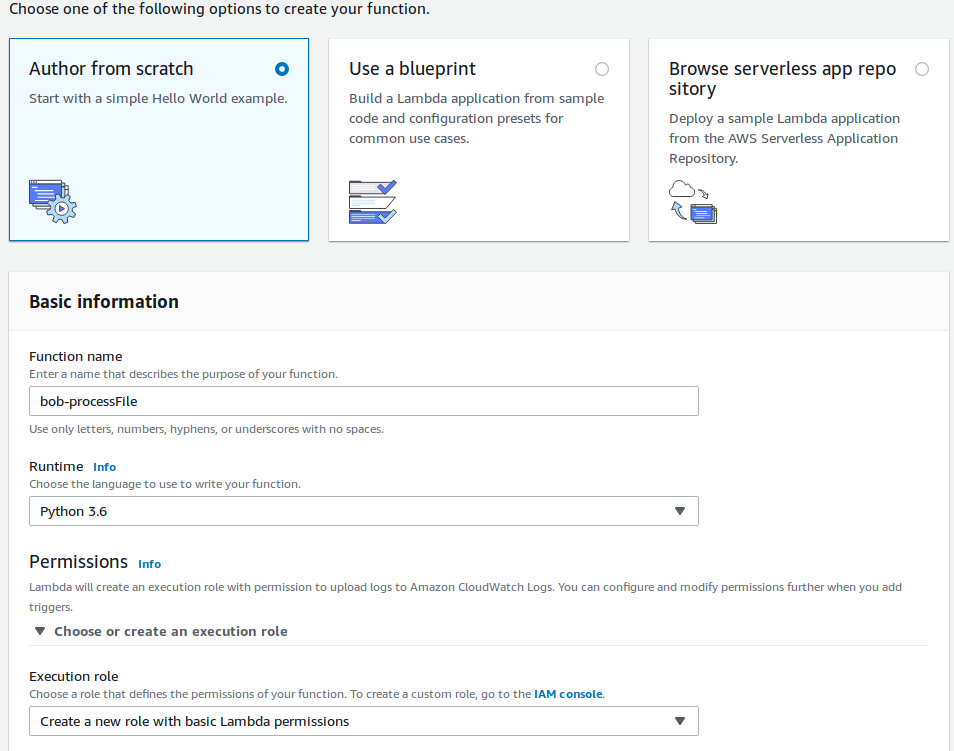

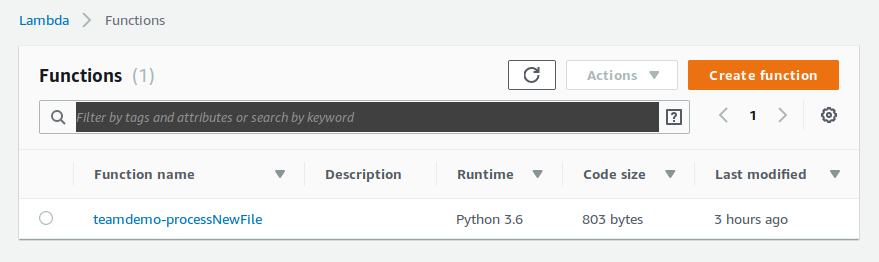

Create a Lambda function.

In the AWS Console, go to Lambda

Create a new function:

- Select Author from scratch
- Call your function something you’ll recognise, e.g. `<firstname>-processFile`
- Choose Python 3.x as the runtime to be able to use the code snippets provided

If you expand the Permissions item, you’ll see the default is "Create a new role with basic Lambda permissions" for your function. That’s a good thing! We’ll edit the role later.

- Select Create function
- Add a trigger that invokes your function by selecting S3 on the right under triggers in the Designer
- Configure the S3 trigger to fire when new files are added to your source bucket
- Make sure you click Add on the trigger configuration
- Scroll down past your code to the Basic settings section and change the Timeout to 6 seconds

Save your Lambda function (the orange button, top-right).
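Lambda calls your handler with two arguments: `event`, the data from whatever triggered it (for an S3 trigger, a dict describing the uploaded object), and `context`, an object with runtime information. Because the handler is plain Python, you can call it locally as a quick sanity check. This is a minimal sketch using a copy of the template handler. Passing an empty dict and `None` only works because the template ignores both arguments.

```python
import json


def lambda_handler(event, context):
    # Copy of the template handler Lambda generates for a new Python function
    return {
        'statusCode': 200,
        'body': json.dumps('Hello from Lambda!')
    }


if __name__ == "__main__":
    # Call the handler directly, the way Lambda would, with stand-in arguments
    response = lambda_handler({}, None)
    print(response['statusCode'], json.loads(response['body']))  # 200 Hello from Lambda!
```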

4. Security

Configure Security Properly.

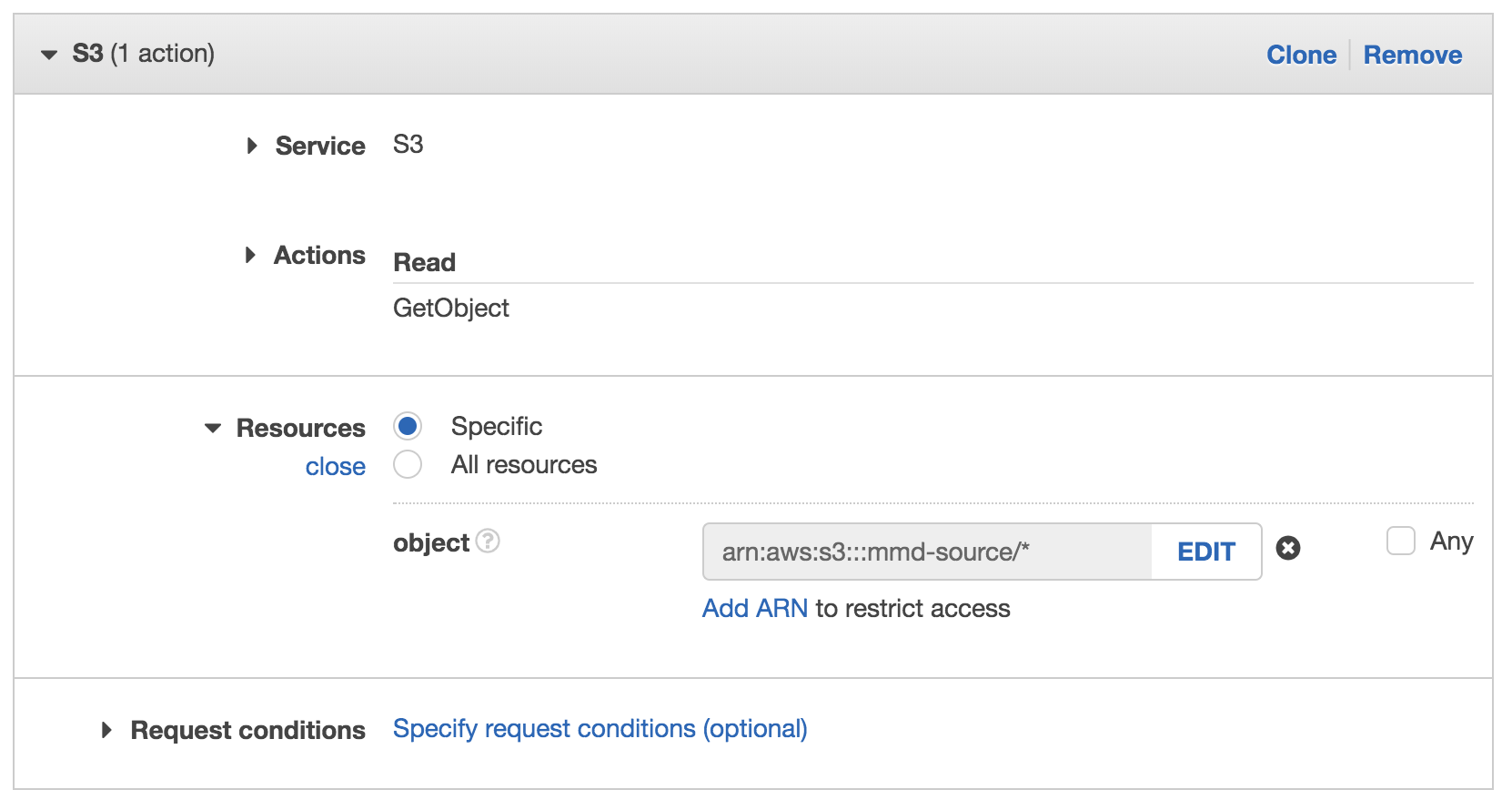

At the moment our Lambda function doesn’t actually have enough rights to read from our source bucket or write to our target bucket. To give it permissions, we need to edit the execution role that was automatically created in the previous step.

In the AWS Console, go to IAM and select Roles

Find the execution role for your function

It will be named the same as your function with a random ID after it, e.g. `bob-processFile-role-diqmuuv6`

We need to add 2 inline policies:

- One to read from the source bucket

- One to write to the target bucket

To add permissions, select Add inline policy and use the wizard to select:

- S3 as the service
- GetObject as the allowed action
- `<firstname>-demo-source` as the specific bucket
- `*` to allow reading any object within the bucket

Select the JSON view of the policy. It’s actually quite simple and should look very similar to this (the only difference should be your bucket name):

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": "s3:GetObject",
                "Resource": "arn:aws:s3:::my-source-bucket/*"
            }
        ]
    }

Select Review policy.

You’d get a warning here if anything was wrong. Any errors in a policy mean that the policy grants no permissions!

Give your policy a meaningful name, e.g. `<firstname>-S3ReadSourceBucket`, then select Create policy.

Add another inline policy to allow writing files to the target bucket. The only permission required is PutObject, and you should restrict it to just the target bucket.
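Both inline policies follow the same pattern and differ only in the action and the bucket. This is a minimal sketch that generates them as JSON, using the hypothetical bucket names `bob-demo-source` and `bob-demo-target` (substitute your own). The optional `Sid` that the visual editor adds is left out.

```python
import json


def s3_object_policy(bucket: str, action: str) -> str:
    """Return an inline policy allowing one S3 object action on every object in `bucket`."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": action,
                # The trailing "/*" scopes the permission to objects inside the bucket
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


# Hypothetical bucket names: replace them with your own
read_policy = s3_object_policy("bob-demo-source", "s3:GetObject")
write_policy = s3_object_policy("bob-demo-target", "s3:PutObject")
print(write_policy)
```

The printed JSON can be pasted into the policy editor’s JSON view. Keeping read and write in separate policies, each limited to one bucket, matches the least-privilege approach described above.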

5. Coding

Let's write the code!

Go back to your Lambda function code

Be wary: there are a couple of deliberate bugs in this code. If you get stuck, ask for help; don’t get frustrated! The steps with bugs are labelled so you’ll know where there might be problems.

- Note that Lambda has automatically created a bit of template code for you. Don’t delete this code; it’s needed to make the function work.

      import json

      def lambda_handler(event, context):
          # TODO implement
          return {
              'statusCode': 200,
              'body': json.dumps('Hello from Lambda!')
          }

- You’ll need to add another import statement at the very top of your function. Change the first line to:

      import boto3, json

- From now on, all the rest of the code we write needs to be inside the event handler function:

      def lambda_handler(event, context):
          # all of our code goes here
          # we can add lots of lines of code here
          # and add some extra line breaks between each section
          # to make it easier to read
          print("remember to indent your code when you copy and paste, otherwise it won't work properly")

          # this bit sends the result back and is the end of our lambda function
          return {
              'statusCode': 200,
              'body': json.dumps('Function has finished')
          }

- Work out which S3 object in the source bucket has triggered Lambda:

      s3 = boto3.resource('s3')
      sourceKey = event['Records'][0]['s3']['object']['key']
      sourceBucket = event['Records'][0]['s3']['bucket']['name']
      print("s3 object: " + sourceBucket + "/" + sourceKey)

- Read the uploaded file from S3 (bug):

      obj = s3.Object(a_bucket, a_key)
      sourceText = obj.get()["Body"].read().decode('utf-8')

- Call Comprehend to analyse the text (bug):

      comprehend = boto3.client(service_name='comprehend')
      sentiment = comprehend.detect_sentiment(Text=sourceText, LanguageCode='en')
      keyPhrases = comprehend.detect_key_phrases(Text=sourceText, LanguageCode='en')

- Build a new object that combines all of the data. Important! Don’t change this bit; the exact format is important for later.

      targetData = json.dumps({
          'sourceKey': sourceKey,
          'sourceBucket': sourceBucket,
          'sourceText': sourceText,
          'sentiment': sentiment,
          'keyPhrases': keyPhrases
      })

- Write the results to the target bucket (bug):

      newObj = s3.Object(targetBucket, targetKey)
      newObj.put(Body=targetData)
      print("processed data: " + targetData)

Test your code by uploading a small text file to the source bucket via the AWS console.

You can see the output of your function in CloudWatch logs.
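One thing to watch for when reading the object key from the event: S3 event notifications URL-encode key names, so a file uploaded as `my notes.txt` arrives as `my+notes.txt`. This is a minimal sketch, using a hypothetical helper `source_object`, that reads the bucket and key and decodes the key with `urllib.parse.unquote_plus`. The sample event is trimmed down to the two fields the helper reads.

```python
from urllib.parse import unquote_plus


def source_object(event: dict) -> tuple[str, str]:
    """Return (bucket, key) for the first record of an S3 notification event."""
    record = event['Records'][0]['s3']
    bucket = record['bucket']['name']
    # Keys arrive URL-encoded (spaces become '+'), so decode before using them
    key = unquote_plus(record['object']['key'])
    return bucket, key


# Trimmed-down event containing only the fields read above
sample_event = {
    'Records': [
        {'s3': {'bucket': {'name': 'bob-demo-source'},
                'object': {'key': 'my+notes.txt'}}}
    ]
}
print(source_object(sample_event))  # ('bob-demo-source', 'my notes.txt')
```

For simple file names without spaces or special characters, the encoded and decoded keys are identical, which is why the workshop code works as-is for those.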

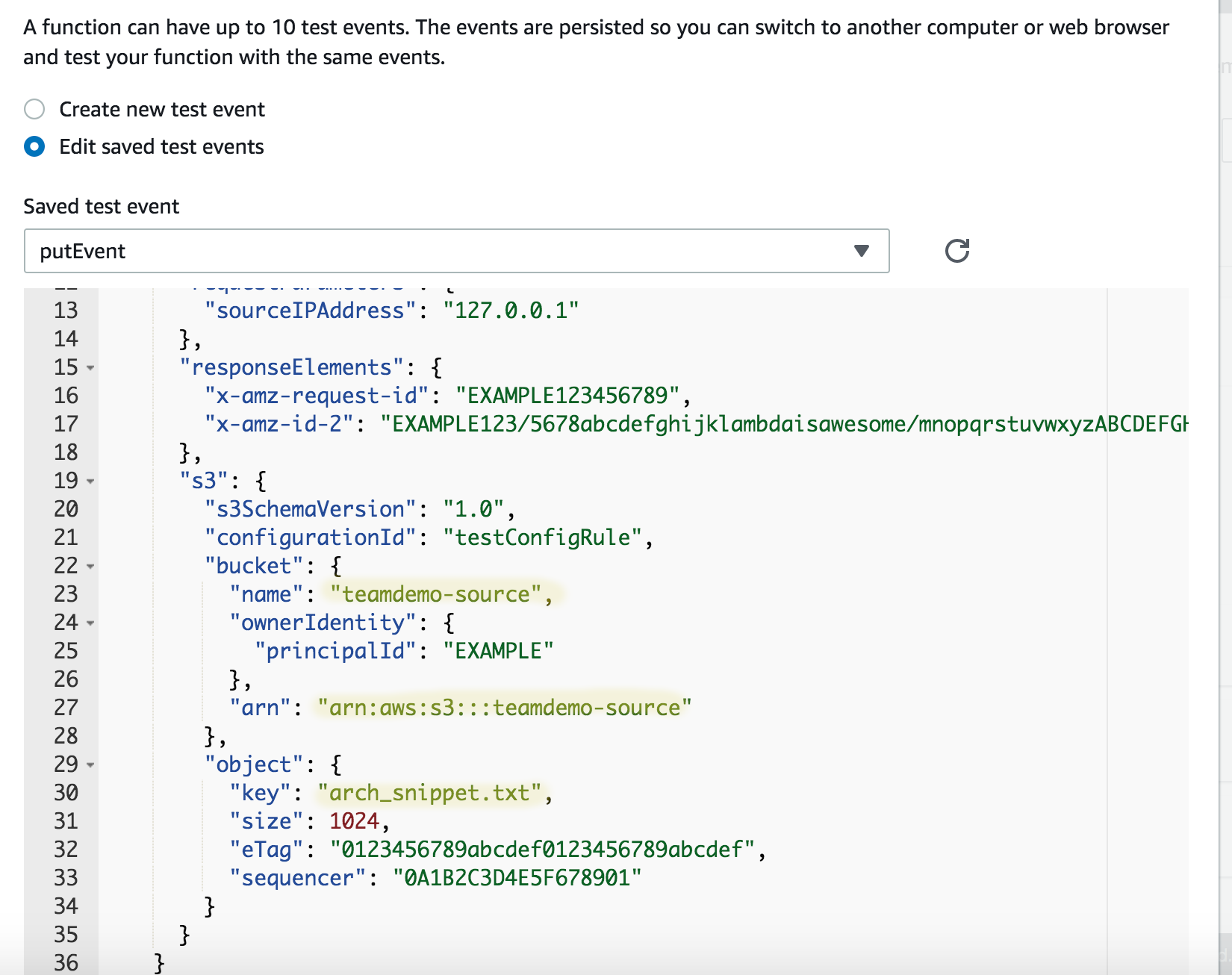

Debugging your function

Because there are some bugs, it won’t work at first. Instead of uploading a file every time you want to test your function, you can create a test event.

Use the Select a test event drop-down next to the Test button at the top of the Lambda page:

- Select Configure test events
- Select Amazon S3 Put as the template event
- Edit the `s3.bucket.name`, `s3.bucket.arn` and `s3.object.key` fields to reference your bucket name and the name of the sample file you uploaded earlier
- Give your test event a name and save it
- You can now re-run the effect of the file upload by just pressing the Test button
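If you’d rather write the test event yourself than edit the template, a cut-down event is enough, since the workshop handler only reads the bucket name and object key. This is a minimal sketch that prints one. `bob-demo-source` and `sample.txt` are placeholder values, and the `eventSource`/`eventName` fields are included only for realism.

```python
import json


def s3_put_test_event(bucket: str, key: str) -> dict:
    """Return a cut-down S3 Put-style event with the fields the handler reads."""
    return {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": bucket, "arn": f"arn:aws:s3:::{bucket}"},
                    # Keep the key simple (no spaces or special characters) so it
                    # is the same whether or not it is URL-encoded.
                    "object": {"key": key},
                },
            }
        ]
    }


# Print JSON that can be pasted into the console's test event editor
print(json.dumps(s3_put_test_event("bob-demo-source", "sample.txt"), indent=2))
```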

If it works, you should see a new file in your target bucket, which you can open to see the resulting JSON.
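The JSON in the target bucket holds the full Comprehend responses alongside the source details. This is a minimal sketch that reads one such document and produces a one-line summary. It assumes `sentiment` and `keyPhrases` hold the `detect_sentiment` and `detect_key_phrases` responses, as in the combined object built in the coding step. The sample document is hand-written with made-up scores, not real output.

```python
import json


def summarise(processed_json: str, top_n: int = 3) -> str:
    """Summarise one processed file: overall sentiment plus top-scoring key phrases."""
    data = json.loads(processed_json)
    label = data['sentiment']['Sentiment']
    phrases = sorted(data['keyPhrases']['KeyPhrases'],
                     key=lambda p: p['Score'], reverse=True)
    top = ", ".join(p['Text'] for p in phrases[:top_n])
    return f"{data['sourceKey']}: {label} ({top})"


# Hand-written sample in the same shape as the combined object (values are made up)
sample = json.dumps({
    'sourceKey': 'notes.txt',
    'sourceBucket': 'bob-demo-source',
    'sourceText': 'The workshop was great fun.',
    'sentiment': {'Sentiment': 'POSITIVE',
                  'SentimentScore': {'Positive': 0.98, 'Negative': 0.01,
                                     'Neutral': 0.01, 'Mixed': 0.0}},
    'keyPhrases': {'KeyPhrases': [
        {'Text': 'The workshop', 'Score': 0.99, 'BeginOffset': 0, 'EndOffset': 12},
        {'Text': 'great fun', 'Score': 0.95, 'BeginOffset': 17, 'EndOffset': 26},
    ]},
})
print(summarise(sample))  # notes.txt: POSITIVE (The workshop, great fun)
```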

6. Send to API

Send the data to an external API.

I’ve exposed an API that you can send your data to. It triggers a Lambda function that puts the data into an Elasticsearch instance, updating the Kibana dashboard.

- Add a new import statement to the top of your function:

      import urllib.parse, urllib.request

  Newer Lambda Python runtimes no longer ship the `requests` library under `botocore.vendored`, so this version makes the HTTP call with the standard library’s `urllib.request` instead.

- Towards the bottom of your code, after you’ve created all of the target data but before the Lambda return statement, add the following to call the API at https://api.cloud-architecture.io/sendToElasticSearch. Don’t forget to change the username from mike to your name in the first line!

      userparam = 'user=mike'
      encodedData = urllib.parse.quote_plus(targetData)
      api = 'https://api.cloud-architecture.io/sendToElasticSearch?'
      dataparam = '&data=' + encodedData
      # urlopen raises urllib.error.HTTPError if the API returns a 4xx/5xx status
      api_response = urllib.request.urlopen(api + userparam + dataparam)

- To check that the API is responding properly, you can add some print statements and check the CloudWatch logs:

      print("api code: " + str(api_response.status))
      print("api response: " + api_response.read().decode('utf-8'))

Congratulations!

You’ve completed the workshop! On the next page you can see the latest output of the API :)
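As an aside, the API’s query string can also be built with `urllib.parse.urlencode`, which by default applies the same `quote_plus` encoding to every value. This is a minimal sketch with a hypothetical helper `build_api_url`. It only builds and inspects the URL and never calls the API. The small JSON payload stands in for `targetData`.

```python
import json
from urllib.parse import urlencode, urlparse, parse_qs

API = 'https://api.cloud-architecture.io/sendToElasticSearch'


def build_api_url(user: str, target_data: str) -> str:
    """Build the GET URL with `user` and `data` query parameters.

    urlencode() quotes each value with quote_plus by default, the same
    encoding as the manual quote_plus call used in the workshop code.
    """
    return API + '?' + urlencode({'user': user, 'data': target_data})


url = build_api_url('mike', json.dumps({'sourceKey': 'notes.txt'}))
print(url)
# Round trip: parsing the query string gives back the original JSON
print(parse_qs(urlparse(url).query)['data'][0])  # {"sourceKey": "notes.txt"}
```

Building the query from a dict avoids having to manage the `?` and `&` separators by hand, which is easy to get wrong when concatenating strings.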

7. Client

Send a snippet.
